Background: Hospitalists are tasked with improving care quality across several inpatient metrics. These include length of stay, readmission rates, health care-associated infections, system capacity and patient satisfaction scores. Although most quality metrics are driven by systems of care, frontline providers may find it difficult to address variation in their individual practice, if any exists, without feedback on their own performance. Data are limited on the means, frequency and content of the feedback that should be given to frontline hospitalist faculty on their performance on quality metrics. We report the structure of a monthly feedback system that we implemented at our institution and the changes in our quality metrics after introducing this ongoing feedback.

Purpose: Our feedback packet gives faculty ongoing information on their performance on outcome and process measures, along with a reminder of section targets. It also gives faculty a robust peer comparison of their performance on these metrics. Such feedback can help faculty identify potential areas for workflow and practice improvement, particularly if they are consistently and significantly underperforming in a particular quality domain.

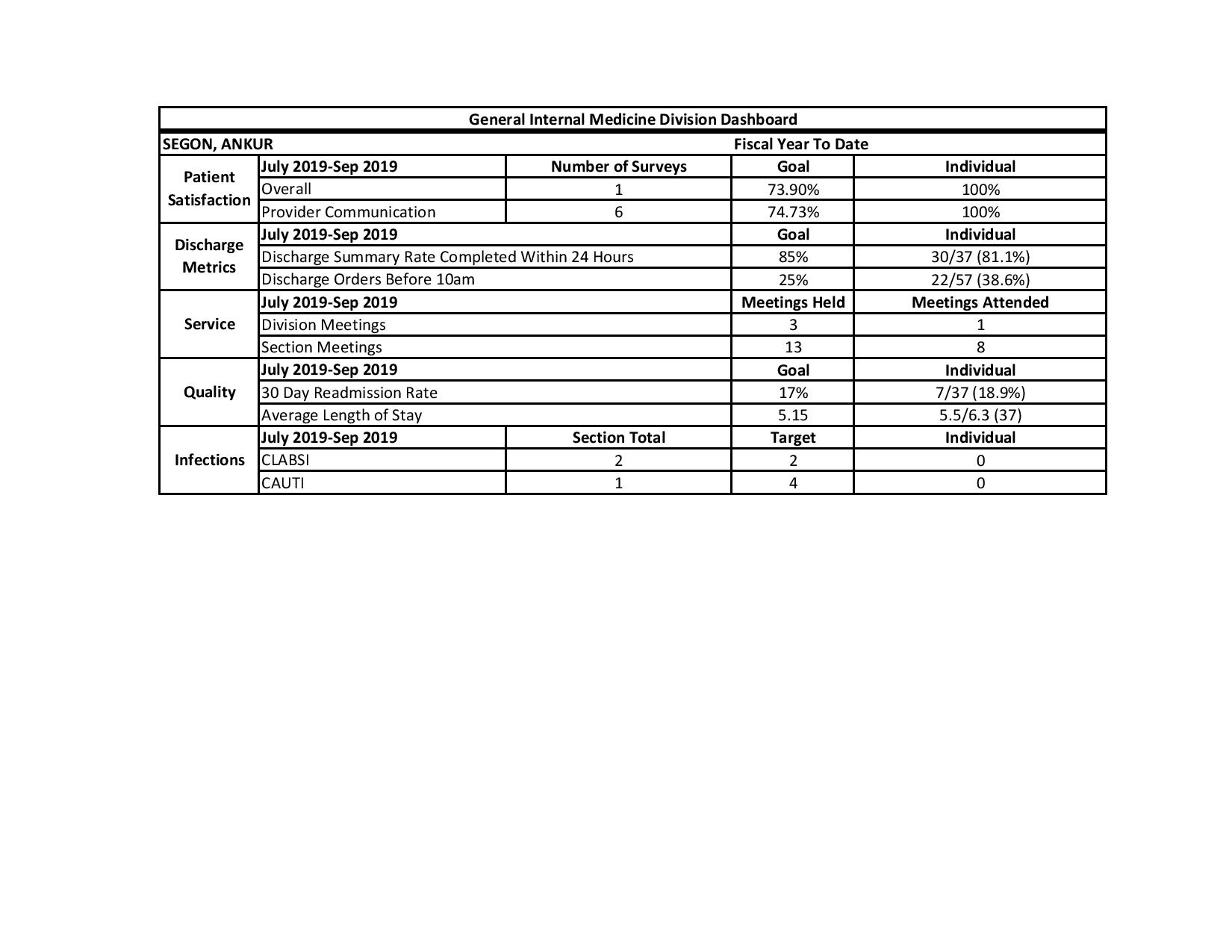

Description: Our section of hospital medicine comprises 46 full-time equivalent (FTE) faculty members. Every month, each faculty member receives a feedback packet consisting of an individual dashboard (see attached figure), a rank-order list, and the medical record numbers of their 30-day readmissions. The individual dashboard shows section targets and individual performance on length of stay, readmission rate, Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) scores (overall and provider-specific), discharge orders placed by 10 am, discharge summaries completed within 24 hours, number of catheter-associated urinary tract infections (CAUTI), number of central line-associated bloodstream infections (CLABSI), and attendance at section and division meetings. The rank-order list ranks all faculty by performance on HCAHPS scores, readmission rates, length of stay and discharge orders placed by 10 am. On this list, the names of faculty meeting quality targets are shown, as is the name of the faculty member receiving the packet; the names of underperforming faculty are masked. The dashboard is complemented by regular discussion at section meetings of provider-specific tactics to improve quality metrics, and by a monthly newsletter that also lists current tactics. All of our quality metrics have improved in the year since we introduced this feedback packet (see attached table).

Conclusions: A monthly feedback packet delivered electronically to frontline faculty can be a helpful adjunct in building a culture of quality in a hospitalist group. We believe this ongoing, peer-compared feedback has been critical to raising faculty awareness and, in turn, their engagement in adopting tactics that can improve performance on quality metrics. Anecdotal feedback from faculty has generally been positive, although some faculty have criticized this emphasis on transparency about performance. We plan to continue providing this feedback monthly and to add order set utilization, attendance at care coordination rounds, and correct identification of the primary inpatient provider in the electronic health record to the feedback packet.