Background: Peer feedback facilitates clinician growth, but obtaining it is challenging in inpatient settings, where provider teams switch frequently. Supervisors often hear only about extremely positive or negative behaviors. There is currently no validated evaluation tool for hospitalist faculty. Historically, our group’s method of soliciting peer evaluations for 180+ staff yielded only 30-40 completed evaluations annually. To improve the response rate, we developed an anonymous, easily accessible survey tool and paired it with purposive sampling for distribution.

Purpose: To increase the quantity and quality of peer evaluations completed for hospitalist clinicians at Yale New Haven Hospital.

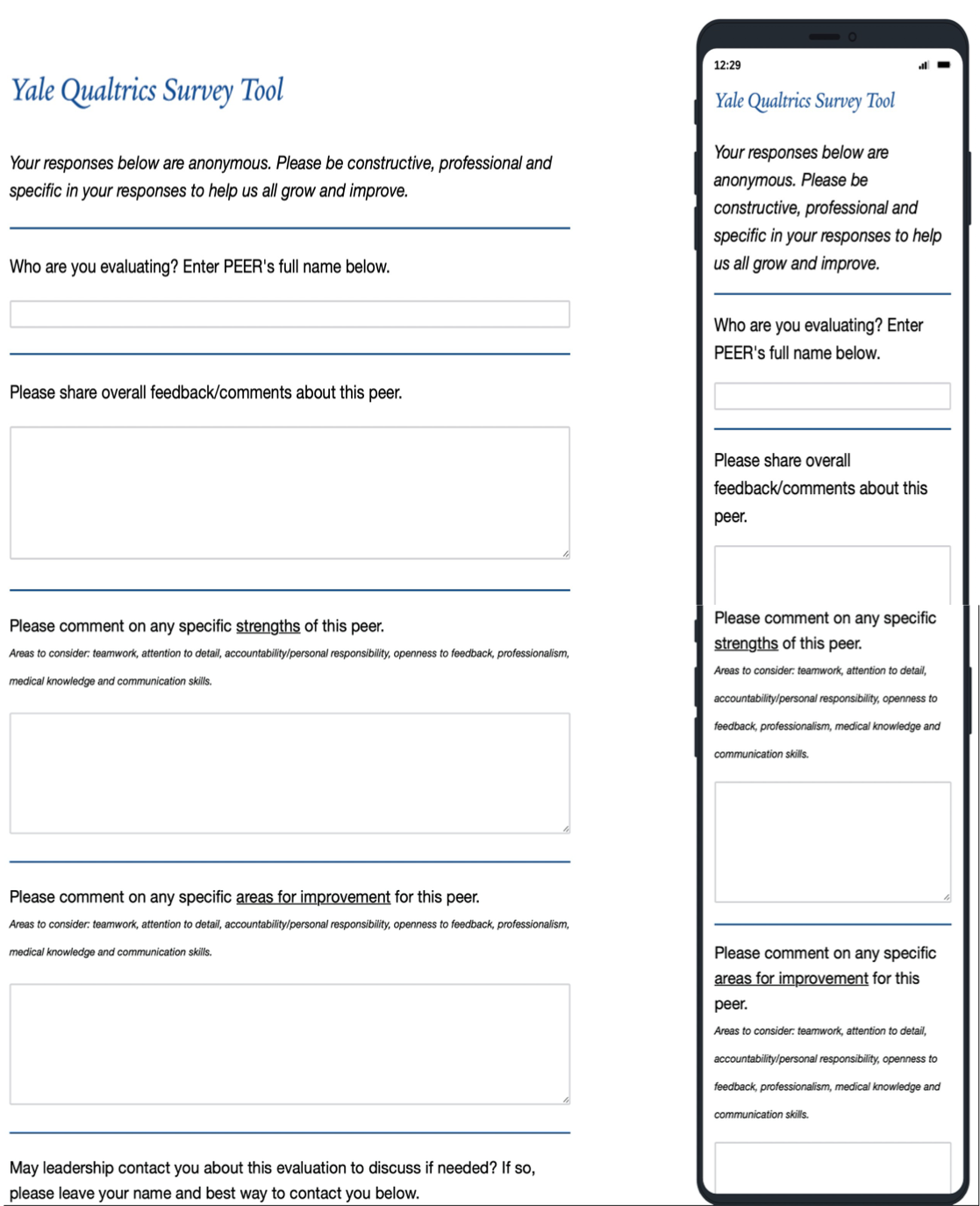

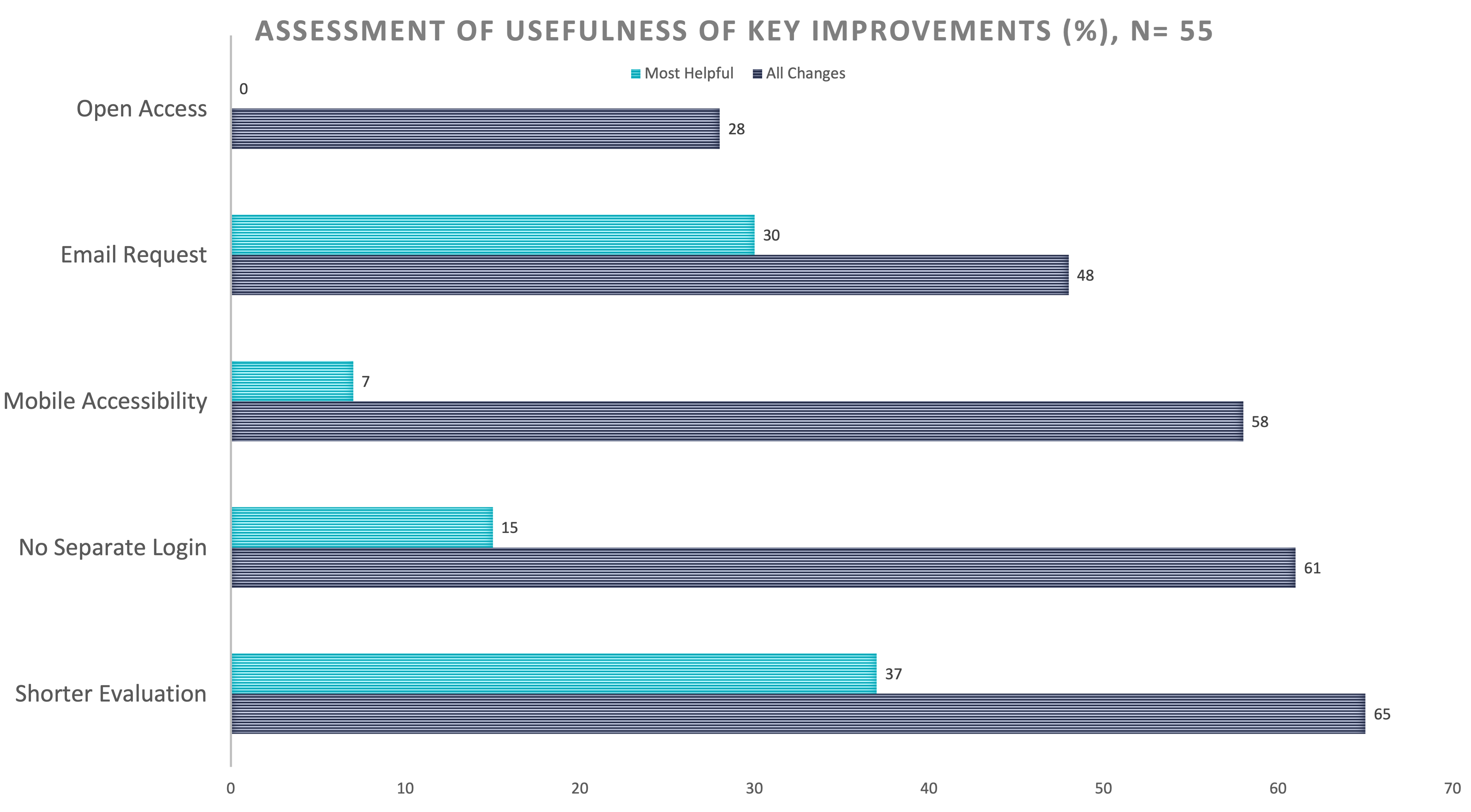

Description: At baseline, we used E-Value software to solicit feedback. This platform required a unique login and 2 steps to initiate an evaluation. Respondents rated colleagues on a simple Likert scale in multiple domains. In Plan-Do-Study-Act (PDSA) cycle 1, beginning 9/2020, the domains were revised to include teamwork, communication, attention to detail, professionalism, and knowledge sharing, with detailed descriptors for each rating level. Access to the form was simplified, and the form was made available year-round. Completion nonetheless remained at about 30 forms for 2020. In cycle 2, starting 9/2021, the revised form was moved to an easy-to-access, device-friendly survey platform, Qualtrics XM. The response rate was unchanged, with 31 forms completed. Most evaluators provided numerical scores, but fewer than 10 included text comments.
In cycle 3, only qualitative questions were used, because supervisors had observed that text comments provided the most actionable feedback. The new form had only 2 fields: general comments about a peer, and comments on that peer's specific strengths and areas for improvement. Another process change in cycle 3 was purposive sampling: leadership sent emails soliciting feedback about a specific colleague. Day clinicians were asked to evaluate an advanced practice provider (APP) or physician they had worked with in the past 2 weeks, and night physicians evaluated assigned night colleagues. An open-access link to the form was also embedded in the weekly team email. After cycle 3 started, 372 responses were collected between February and October 2022, a substantial increase over prior cycles. Most clinicians received at least one evaluation, including 62 of 65 day physicians, 63 of 66 day APPs, and 40 of 54 night physicians. Fifty-five staff who completed the new evaluations were polled about the PDSA changes and asked to identify ALL of the changes that improved their completion rate.
Sixty-five percent of respondents identified the shorter form, 61% the absence of a unique login, 58% accessibility from all devices, 48% the direct email request from leadership, 45% the evaluation request about a specific peer, and 28% continual access to the evaluation. When asked about the SINGLE most important change, 37% chose the shorter evaluation form and 30% chose the direct email request from leadership.

Conclusions: Implementing a targeted, short-form peer evaluation tool on a device-friendly platform, combined with individual email solicitations, substantially increased the volume of peer feedback. End users named nearly all of the changes as positive factors in their completion rates, and they identified the shorter evaluation form and the direct email request as the key reasons they completed this evaluation tool compared with prior versions. Further investigation into the impact on clinician practice is needed.