Background: High-quality clinical documentation is essential for patient safety. Thoughtful documentation conveys the clinician’s reasoning and is considered a professional responsibility. There are no accepted standards for assessing documentation with respect to clinical reasoning. We therefore undertook this study to establish a metric for evaluating hospitalists’ documentation of clinical reasoning in the assessment and plan (A&P) section of admission notes.

Methods: This was a retrospective review of admission notes written by hospitalists at three hospitals between January 2014 and October 2017. Admission notes were included for patients hospitalized with a diagnosis of fever, syncope/dizziness, or abdominal pain. A total of 1130 admission notes were randomly identified; notes were excluded if they were not written on the hospitalists’ service or if the diagnosis had been confirmed in the emergency department. To sample the notes of many providers, no more than 3 notes written by any single provider were analyzed. We developed the ‘Clinical Reasoning in Admission Notes Assessment & PLan’ (CRANAPL) tool to assess the comprehensiveness of clinical reasoning documented in the A&Ps of admission notes. The tool was iteratively revised during pilot testing; ultimately, two authors scored all A&Ps using the finalized version of the CRANAPL tool. These authors also assessed each A&P using two single-item broad ratings: a ‘global clinical reasoning’ measure and a ‘global readability/clarity’ measure. All data were deidentified prior to scoring. Validity evidence was established for content, internal structure, and relation to other variables.

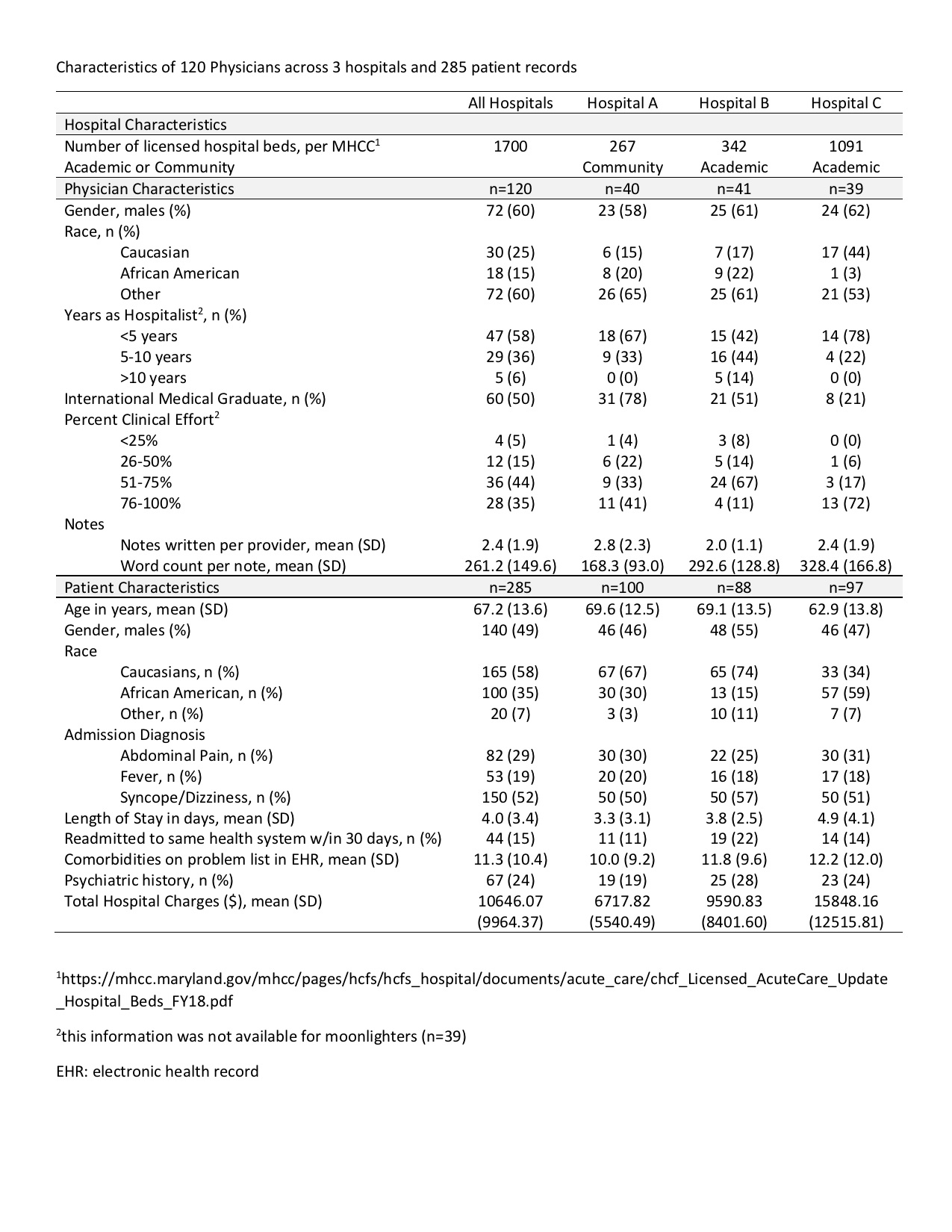

Results: The table shows the total number of hospitalists and the number of admission notes that were evaluated. The nine-item CRANAPL rubric includes elements for problem representation, uncertainty, differential diagnosis, and plan, as well as items related to length of stay and disposition plans. The mean total CRANAPL score across both raters was 6.4 (SD 2.2), and it varied significantly between hospital sites (p < 0.001). The intraclass correlation coefficient (ICC) measuring inter-rater reliability between the two raters for the total CRANAPL score was 0.83 (95% CI 0.76-0.87). Associations between CRANAPL total scores, global clinical reasoning scores, and global readability/clarity scores were statistically significant (p < 0.001). In multivariate regressions adjusting for covariates, American Medical Graduates had higher CRANAPL scores than International Medical Graduates (p < 0.05).
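
For readers unfamiliar with the ICC reported above, the following is a minimal sketch of how such a coefficient can be computed when every note is scored by the same raters. The abstract does not state which ICC form was used; this sketch assumes the two-way random-effects, absolute-agreement, single-rater form, often written ICC(2,1). The function name `icc_2_1` and the example scores are hypothetical illustrations, not the study's code or data.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `scores` is a list of rows, one row per note, one column per rater.
    Assumption: the ICC form is not specified in the abstract.
    """
    n = len(scores)      # number of notes (subjects)
    k = len(scores[0])   # number of raters
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares from a two-way ANOVA without replication
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)             # between-note mean square
    msc = ss_cols / (k - 1)             # between-rater mean square
    mse = ss_err / ((n - 1) * (k - 1))  # residual mean square

    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Hypothetical total scores from two raters on five notes (illustration only)
example_scores = [[6, 7], [4, 4], [8, 7], [5, 6], [9, 9]]
print(round(icc_2_1(example_scores), 2))
```

The absolute-agreement form penalizes a rater who scores systematically higher or lower than the other, which is usually the relevant notion when a rubric's totals are meant to be comparable across raters.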

Conclusions: This study represents a first step toward characterizing clinical reasoning documentation in Hospital Medicine using real patient notes. With validity evidence established, the CRANAPL rubric can be used to assess hospitalists’ documentation of clinical reasoning and to provide feedback. Such assessment and feedback are an essential step toward improving the diagnostic process and reducing medical errors.