Background: Length of stay (LOS) is a key metric that hospitals follow closely for quality and operational purposes. Hospital medicine groups frequently use LOS to evaluate their providers and to identify opportunities for improvement; however, the most appropriate method for attributing specific hospital encounters to individual providers is debated. Complex schemes of weighted Observed to Expected (O-E) LOS metrics have been proposed but require significant computational effort (1). Attribution to the attending or discharging provider is more frequently used but has significant methodological flaws when patients with a prolonged length of stay are cared for by multiple providers. A simplified measure of LOS based on the ratio of a provider’s progress notes to discharge summaries, known as Discharge Efficiency (DE), has been reported anecdotally (2). The aim of this study is to compare multiple attribution methods for LOS to determine whether the measures are significantly correlated and whether DE is a viable metric.

Methods: The study was conducted in a large hospital medicine group based at a single academic, urban, tertiary referral center. Inpatient encounters from May 2018 through May 2019 were reviewed. Provider-level Observed to Expected (O-E) LOS data based on attending, billing, and discharging attribution schemes were obtained from curated hospital data sets. Progress note and discharge summary data were obtained directly from the electronic health record. The analysis included providers who were with the group for at least 10 months during the study period. Median and interquartile range (IQR) values by provider were calculated for shifts, notes, and discharges. To account for the effect of service line workflow and patient severity on LOS, discharging LOS and DE were adjusted based on the proportion of each provider’s shifts on specific service lines and the service’s average LOS for each metric. For each metric, providers were ranked within the group. For each pair of metrics, both raw LOS values and group ranks were plotted against each other and the coefficients of determination were calculated. Provider ranks on each metric were compared before and after service adjustment using Friedman’s test.
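The core calculations described above (the DE ratio, within-group ranking, and the coefficient of determination between two metrics) can be illustrated with a minimal Python sketch. The provider labels, note counts, and comparison LOS values are hypothetical and are not study data. Ranking the lowest value as 1 and computing the coefficient of determination as the squared Pearson correlation of a simple linear fit are assumptions, since the abstract does not specify either. Service line adjustment and Friedman’s test are not shown.

```python
# Hypothetical per-provider counts: (progress notes, discharge summaries).
# These values are illustrative only and are not taken from the study.
counts = {"A": (120, 30), "B": (200, 40), "C": (90, 30), "D": (150, 25)}


def discharge_efficiency(progress_notes, discharge_summaries):
    """Ratio of a provider's progress notes to discharge summaries."""
    if discharge_summaries <= 0:
        raise ValueError("provider has no discharge summaries")
    return progress_notes / discharge_summaries


def rank(values):
    """Rank 1 = smallest value (assumed); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def r_squared(x, y):
    """Squared Pearson correlation, i.e. R^2 of a simple linear fit."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy ** 2 / (sxx * syy)


de = [discharge_efficiency(p, d) for p, d in counts.values()]
de_ranks = rank(de)

# Hypothetical second metric for the same providers, in the same order
# (for example, an attending-attributed O-E LOS value).
other_metric = [1.05, 0.95, 1.10, 1.00]
r2 = r_squared(de, other_metric)

print(dict(zip(counts, de)))
print(dict(zip(counts, de_ranks)))
print(round(r2, 3))
```

Applying `r_squared` to the rank lists instead of the raw values would give the rank-based comparison described above.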

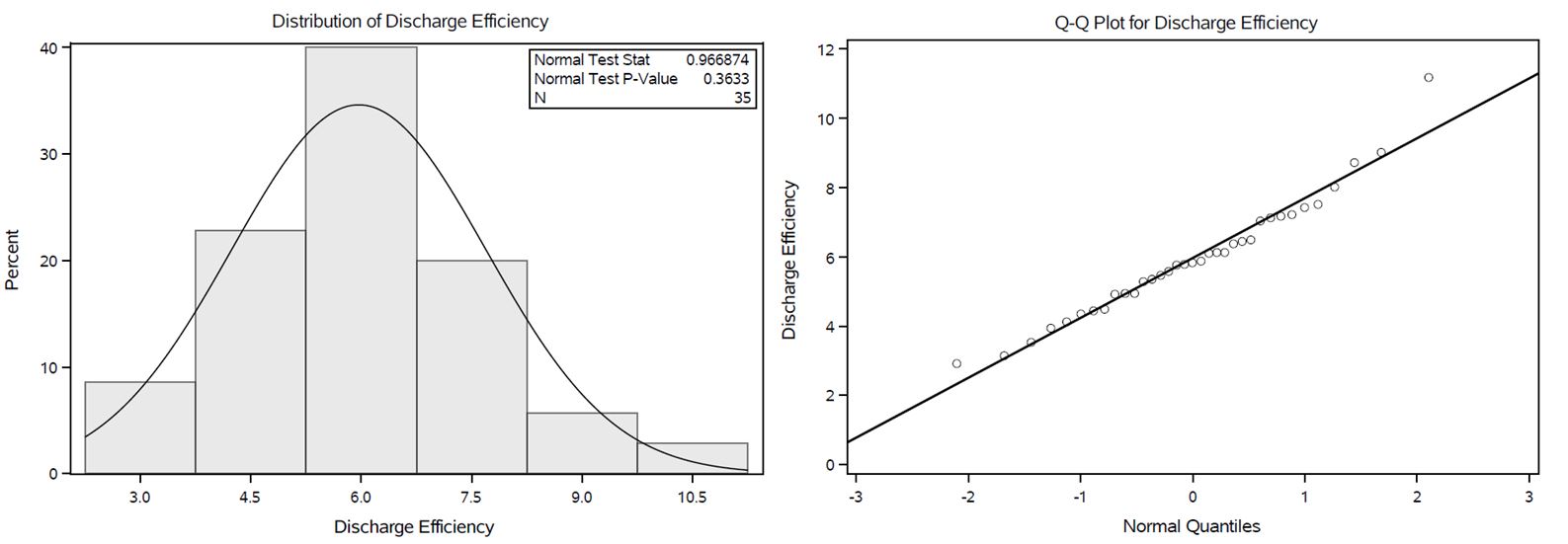

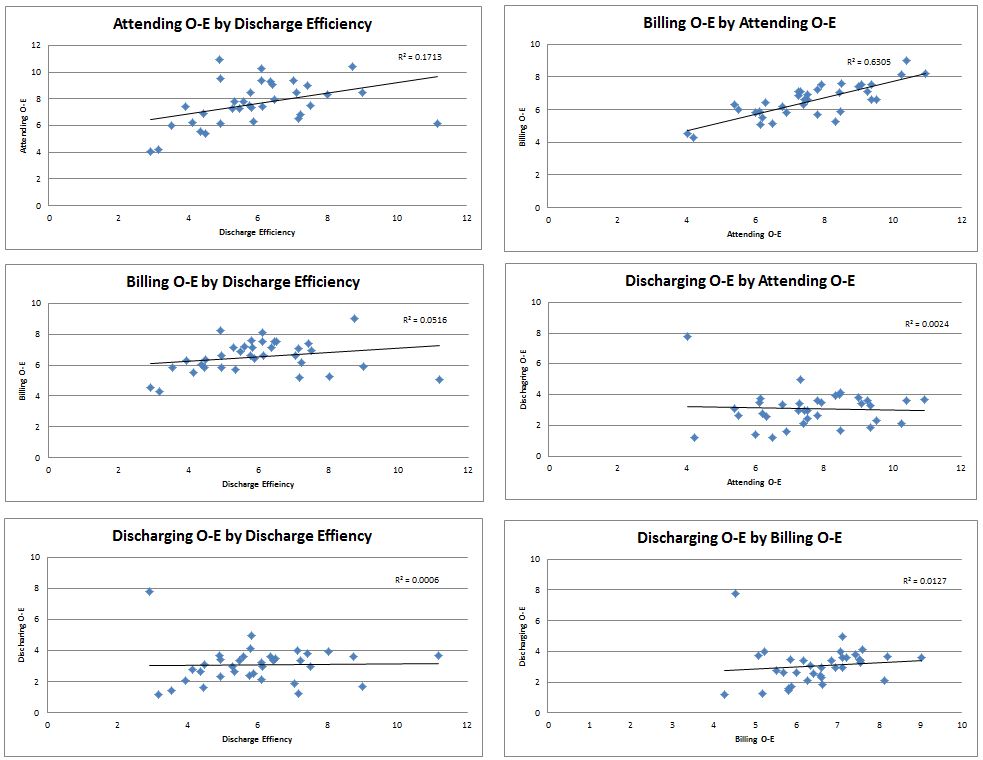

Results: A total of 35 providers, accounting for 3,813 day shifts (median 122, IQR 78.5-147.5), 36,502 notes (median 1,185, IQR 742.5-1,380.5), and 5,834 discharges (median 176, IQR 137.5-200.5), were included in the analysis. DE values by provider were normally distributed in the sample population (Figure 1). Among pairwise comparisons of the attending, billing, discharging, and DE metrics, the only pairing that yielded a coefficient of determination >0.2 (Figure 1) was attending and billing (R² = 0.63). Service-specific adjustment of discharging LOS and DE yielded no significant difference in provider rank order for either metric.

Conclusions: Discharge efficiency is a simple provider-level LOS metric that was normally distributed in the study sample, can be obtained easily from patient note data, and requires minimal computation. Service line adjustment of discharging provider LOS or DE yielded no significant change in provider ranks. Correlation between the various provider LOS metrics is poor, suggesting the need to use multiple measures to adequately capture hospitalist performance in this complex domain. Discharge efficiency is one such easy-to-use metric.