Background: Immunosuppression regimens are highly effective at preventing rejection but are complex and carry a risk of toxicity. Clinical decision support (CDS) tools embedded in electronic health records offer an opportunity to systematically help providers order these medications appropriately. However, discrepancies may exist between the clinically documented plan and provider orders. Natural language processing (NLP) has been used to process, categorize, and evaluate the large volume of unstructured data in clinical documentation, and prior studies have shown its potential in the medication reconciliation process. Generative Pre-trained Transformer (GPT) models have shown great potential in understanding and generating human-like text, making them a valuable tool for refining the NLP algorithms used in our approach. By incorporating GPT in a sequential model system, we can improve the model’s ability to accurately identify discrepancies and reduce the number of false positives, thereby minimizing alert fatigue and increasing the likelihood that providers will act on the generated alerts.

Purpose: To improve patient safety and reduce medical errors in admitted patients receiving immunosuppressive medications by detecting discrepancies between documented immunosuppression plans and electronic orders and alerting providers to them in real time.

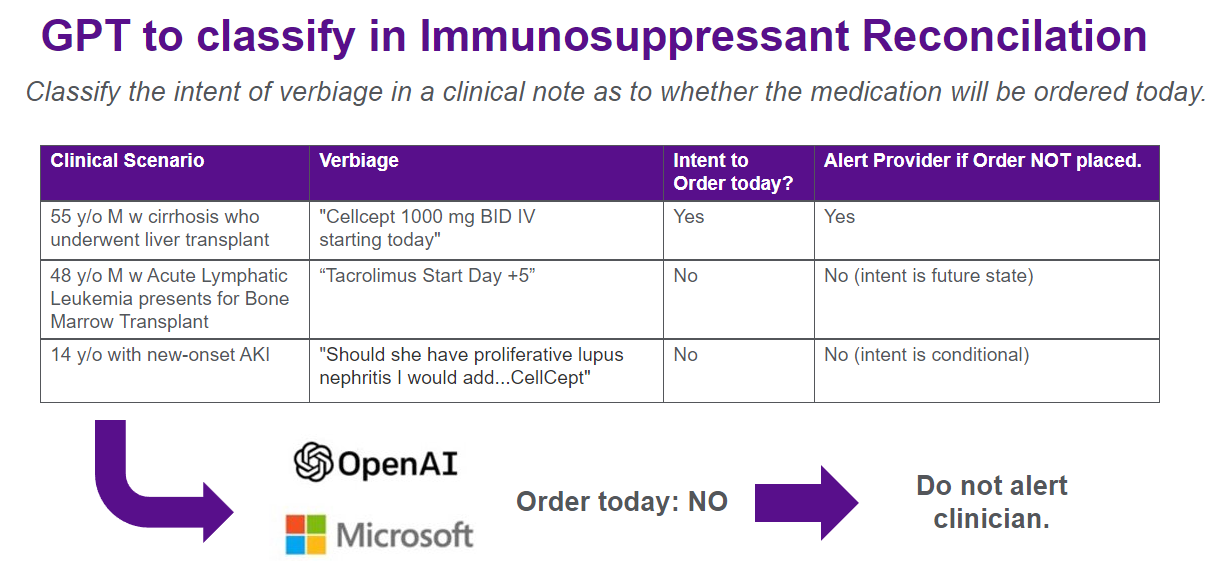

Description: The first patient-facing GPT-enabled CDS tool implemented at our academic medical center was developed between February and July 2023. Three key immunosuppressive medications (tacrolimus, sirolimus, and mycophenolate mofetil) were identified and served as the basis for an initial NLP model. A best practice alert (BPA) was designed, and its rules logic was validated concurrently (Figure A). Fast Healthcare Interoperability Resources (FHIR) endpoints of the electronic health record were used for ‘live’ note extraction, with notes parsed in real time by the model. Alert firings were reviewed by development staff and a clinician to ensure accuracy, and the model was adjusted accordingly, including refinement of a GPT filter layer through prompt engineering and in-context learning to reduce false positives arising from notes that expressed future or conditional intent (Figure B). The model went live in the production environment of the electronic health record across all five hospitals in our system in July 2023. In a prospective evaluation over the following five months, inpatient notes from more than 44,000 admissions were parsed, identifying 53 cases in which a medication matched by regular expression had no corresponding electronic medication order within 15 minutes of note publication. A sequential GPT layer then classified intent and filtered out 28 false positives, leaving 25 alerts (~5 per month) that notified first-contact providers of the potential discrepancy. Alerts are regularly reviewed by the development team, which includes a physician, and by an associated clinical champion for further analysis and quality improvement opportunities.
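The two-stage logic described above can be illustrated with a minimal Python sketch. It is not the production implementation: the regular expression, the `Order` record, the reading of the 15-minute window (an order counts if placed no later than 15 minutes after note publication), and the keyword-based `keyword_intent_stub` that stands in for the GPT intent classifier are all assumptions made for illustration.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Iterable

# Hypothetical pattern; the production regular expressions are not given here.
MED_PATTERN = re.compile(
    r"\b(tacrolimus|sirolimus|mycophenolate mofetil)\b", re.IGNORECASE
)
# Hypothetical cue words used only by the stand-in intent classifier below.
CUE_PATTERN = re.compile(
    r"\b(if|consider|once|when|will|plan to|tomorrow)\b", re.IGNORECASE
)
ORDER_WINDOW = timedelta(minutes=15)


@dataclass
class Order:
    """Illustrative medication order record (not an EHR or FHIR type)."""
    medication: str
    placed_at: datetime


def mentioned_medications(note_text: str) -> set[str]:
    """Stage 1a: medications matched in the note by regular expression."""
    return {m.group(1).lower() for m in MED_PATTERN.finditer(note_text)}


def unmatched_medications(
    note_text: str, published_at: datetime, orders: Iterable[Order]
) -> set[str]:
    """Stage 1b: mentioned medications with no order by publication + 15 min."""
    cutoff = published_at + ORDER_WINDOW
    ordered = {o.medication.lower() for o in orders if o.placed_at <= cutoff}
    return mentioned_medications(note_text) - ordered


def keyword_intent_stub(note_text: str, medication: str) -> bool:
    """Stand-in for the GPT layer: True if any sentence mentioning the
    medication lacks a future or conditional cue word."""
    for sentence in re.split(r"(?<=[.!?])\s+", note_text):
        if medication in sentence.lower() and not CUE_PATTERN.search(sentence):
            return True
    return False


def medications_to_alert(
    note_text: str,
    published_at: datetime,
    orders: Iterable[Order],
    classify_intent: Callable[[str, str], bool] = keyword_intent_stub,
) -> list[str]:
    """Stage 2: keep only unmatched medications classified as current intent."""
    candidates = unmatched_medications(note_text, published_at, orders)
    return [med for med in sorted(candidates) if classify_intent(note_text, med)]


note = "Continue tacrolimus 2 mg BID. Consider sirolimus if renal function worsens."
published = datetime(2023, 7, 1, 9, 0)
print(medications_to_alert(note, published, []))  # ['tacrolimus']
```

In this example both medications pass the first stage because neither has an order, but the conditional sirolimus mention is filtered out at the second stage, mirroring how the sequential layer reduced 53 candidate cases to 25 alerts. In a real deployment, `classify_intent` would be replaced by a call to the prompt-engineered GPT filter.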

Conclusions: While rare, discrepancies between a documented clinical plan and immunosuppressant orders can occur. Using a base NLP model enhanced by a sequential GPT layer, we were able to detect potential discrepancies and surface them to providers for reconciliation. Next steps include prospective evaluation of the downstream outcomes of this alert, including follow-up provider actions and effects on quality outcomes.