Background: In hospital medicine, around 250,000 diagnostic errors occur yearly in American hospitals, and a significant proportion are attributed to failures in clinical reasoning. Feedback on the diagnostic process has been proposed as one method of improving clinical reasoning. However, in the current healthcare system, barriers to the delivery and receipt of feedback include limited time and negative reactions to feedback. Given the shift towards asynchronous, digital communication and electronic learning modalities, it is possible that electronic feedback (“e-feedback”) could overcome these barriers.

Purpose: We developed an e-feedback system for hospitalists around episodes of care escalation (transfer to ICU, rapid responses) and sought to evaluate the program by answering two questions: 1. How satisfied will hospitalists be with clinical reasoning e-feedback? 2. What commitment to change will hospitalists make following e-feedback?

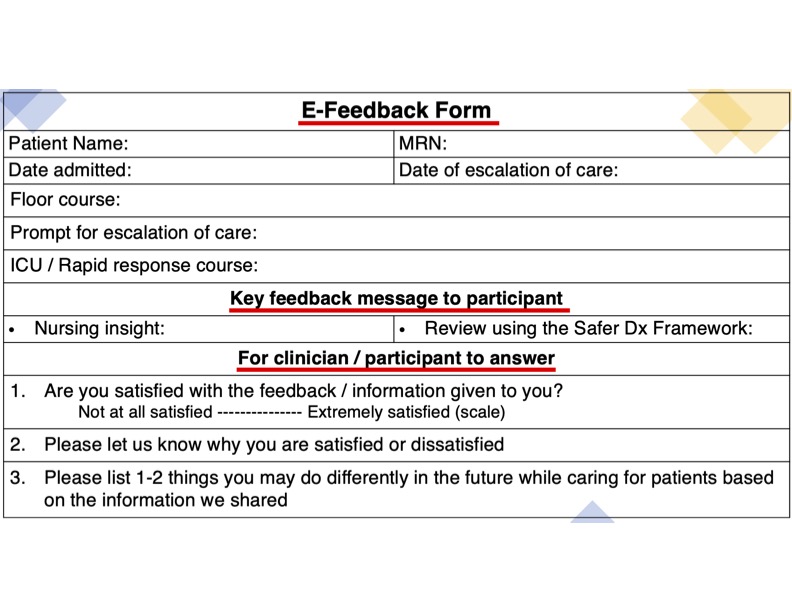

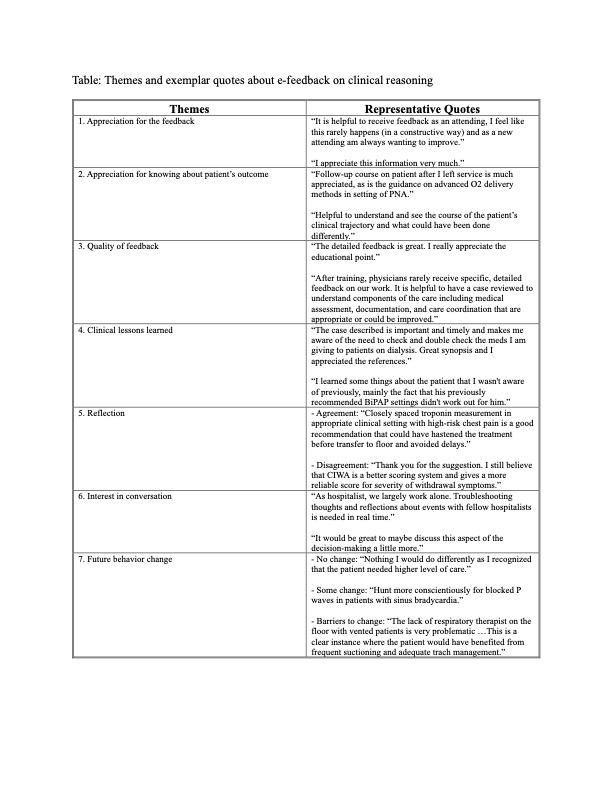

Description: Methods: We chose a qualitative study design based on a constructivist paradigm. This study was conducted at one academic medical center from February to June 2023. Participants were adult hospitalists, including physicians and advanced practice providers (APPs). A team consisting of two hospitalists, one senior internal medicine resident, and one clinical nurse reviewed escalations of care on the hospitalist service each week during the study period. Each patient’s escalation was reviewed using the Safer Dx framework; timely and confidential feedback was then emailed to the hospitalists involved in the patient’s care via REDCap. Hospitalists were able to respond to the e-feedback (figure); they were asked to rate their satisfaction with the e-feedback, give their reasons for satisfaction or dissatisfaction, and state whether they would modify their clinical practice based on the e-feedback. The open-ended text comments from the hospitalists were analyzed using a general thematic analysis framework. A preliminary codebook was created by reviewing 40% of the responses and was revised through further coding and discussion. Two coders independently applied codes to all open-ended responses; discrepancies were resolved to ensure coder agreement. Codes were aggregated into themes to tell a coherent story based on the data.

Results: A total of 49 of 58 adult hospitalists agreed to participate; 78% were physicians and 22% were APPs. Of the 124 e-feedback surveys sent to the hospitalists, 105 were returned (response rate 85%). Of the returned surveys, 70 (67%) were rated highly satisfied, 24 (23%) were rated moderately satisfied, and 11 (10%) were rated not satisfied. The thematic analysis of open-ended responses, with representative quotes, is shown in the table.

Conclusions: Hospitalists appreciated the e-feedback process, reflected on the diagnostic process, and identified future behavior changes. Further studies are needed to determine whether e-feedback enhances the clinical reasoning of hospitalists.